Apache Kafka

The Sysdig agent automatically collects metrics from Kafka via JMX polling. You need to provide consumer names and topics in the agent config file to collect consumer-based Kafka metrics.

This page describes the default configuration settings, how to edit the configuration to collect additional information, the metrics available for integration, and a sample result in the Sysdig Monitor UI.

Kafka Setup

Kafka will automatically expose all metrics. You do not need to add anything on the Kafka instance.

Zstandard, one of the compressions available in the Kafka integration, is only included in Kafka versions 2.1.0 or newer. See also the Apache documentation.

Sysdig Agent Configuration

Review how to edit dragent.yaml to Integrate or Modify Application Checks.

Metrics from Kafka via JMX polling are already configured in the agent's default-settings configuration file. Metrics for consumers, however, need to use app checks to poll the Kafka and Zookeeper API. You need to provide consumer names and topics in the dragent.yaml file.

Default Configuration

Since consumer names and topics are environment-specific, a default configuration is not present in dragent.default.yaml.

Refer to the following examples for adding Kafka checks to dragent.yaml.

Remember! Never edit dragent.default.yaml directly; always edit only dragent.yaml.

Example 1: Basic Configuration

A basic example with sample consumer and topic names:

app_checks:
  - name: kafka
    check_module: kafka_consumer
    pattern:
      comm: java
      arg: kafka.Kafka
    conf:
      kafka_connect_str: "127.0.0.1:9092" # kafka address, usually localhost as we run the check on the same instance
      zk_connect_str: "localhost:2181" # zookeeper address, may be different than localhost
      zk_prefix: /
      consumer_groups:
        sample-consumer-1: # sample consumer name
          sample-topic-1: [0, ] # sample topic name and partitions
        sample-consumer-2: # sample consumer name
          sample-topic-2: [0, 1, 2, 3] # sample topic name and partitions

Example 2: Store Consumer Group Info (Kafka 0.9+)

From Kafka 0.9 onwards, you can store consumer group config info inside Kafka itself for better performance.

app_checks:
  - name: kafka
    check_module: kafka_consumer
    pattern:
      comm: java
      arg: kafka.Kafka
    conf:
      kafka_connect_str: "localhost:9092"
      zk_connect_str: "localhost:2181"
      zk_prefix: /
      kafka_consumer_offsets: true
      consumer_groups:
        sample-consumer-1: # sample consumer name
          sample-topic-1: [0, ] # sample topic name and partitions

If the kafka_consumer_offsets entry is set to true, the app check looks for consumer offsets in Kafka. The app check also looks in Kafka if zk_connect_str is not set.
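The selection rule described here can be sketched as a small Python function. This is only an illustration, not the agent's code: the function name `offset_sources` is hypothetical, and the sketch assumes that Zookeeper is consulted whenever zk_connect_str is set, which the text above does not state explicitly.

```python
def offset_sources(conf):
    """Return the places a check configured with `conf` would read consumer offsets from."""
    sources = []
    zk = conf.get("zk_connect_str")
    # Assumption (not stated in the docs): Zookeeper is used whenever zk_connect_str is set.
    if zk:
        sources.append("zookeeper")
    # Stated in the docs: Kafka is used when kafka_consumer_offsets is true,
    # or when zk_connect_str is not set.
    if conf.get("kafka_consumer_offsets", False) or not zk:
        sources.append("kafka")
    return sources


print(offset_sources({"zk_connect_str": "localhost:2181"}))
# -> ['zookeeper']
print(offset_sources({"zk_connect_str": "localhost:2181", "kafka_consumer_offsets": True}))
# -> ['zookeeper', 'kafka']
print(offset_sources({"kafka_connect_str": "localhost:9092"}))
# -> ['kafka']
```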

Example 3: Aggregate Partitions at the Topic Level

To enable aggregation of partitions at the topic level, use kafka_consumer_topics with aggregate_partitions = true. In this case, the app check aggregates the per-partition lag and offset values into topic-level values, reducing the number of metrics collected.

Set aggregate_partitions = false to disable aggregation. In this case, the app check reports lag and offset values for each partition.

app_checks:
  - name: kafka
    check_module: kafka_consumer
    pattern:
      comm: java
      arg: kafka.Kafka
    conf:
      kafka_connect_str: "localhost:9092"
      zk_connect_str: "localhost:2181"
      zk_prefix: /
      kafka_consumer_offsets: true
      kafka_consumer_topics:
        aggregate_partitions: true
      consumer_groups:
        sample-consumer-1: # sample consumer name
          sample-topic-1: [0, ] # sample topic name and partitions
        sample-consumer-2: # sample consumer name
          sample-topic-2: [0, 1, 2, 3] # sample topic name and partitions
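To illustrate why topic-level aggregation reduces the number of metrics, here is a minimal Python sketch. It is not the agent's implementation: the offset numbers are made up, lag is taken as broker offset minus consumer offset, and summing across partitions is an assumption, since the documentation does not say how the values are combined.

```python
from collections import defaultdict

# Hypothetical samples: (consumer, topic, partition) -> (broker_offset, consumer_offset)
samples = {
    ("sample-consumer-2", "sample-topic-2", 0): (120, 100),
    ("sample-consumer-2", "sample-topic-2", 1): (80, 80),
    ("sample-consumer-2", "sample-topic-2", 2): (50, 45),
    ("sample-consumer-2", "sample-topic-2", 3): (10, 0),
}


def partition_lag(samples):
    """One lag value per partition (the aggregate_partitions = false view)."""
    return {key: broker - consumer for key, (broker, consumer) in samples.items()}


def topic_lag(samples):
    """One lag value per consumer and topic (the aggregate_partitions = true view).

    Assumption: partitions are combined by summing their lag.
    """
    totals = defaultdict(int)
    for (consumer, topic, _partition), lag in partition_lag(samples).items():
        totals[(consumer, topic)] += lag
    return dict(totals)


print(len(partition_lag(samples)))  # -> 4 series, one per partition
print(topic_lag(samples))           # -> {('sample-consumer-2', 'sample-topic-2'): 35}
```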

Example 4: Custom Tags

Optional tags can be applied to every emitted metric, service check, and/or event.

app_checks:
  - name: kafka
    check_module: kafka_consumer
    pattern:
      comm: java
      arg: kafka.Kafka
    conf:
      kafka_connect_str: "localhost:9092"
      zk_connect_str: "localhost:2181"
      zk_prefix: /
      consumer_groups:
        sample-consumer-1: # sample consumer name
          sample-topic-1: [0, ] # sample topic name and partitions
      tags: ["key_first_tag:value_1", "key_second_tag:value_2", "key_third_tag:value_3"]

Example 5: SSL and Authentication

If SSL and authentication are enabled on Kafka, use the following configuration.

app_checks:
  - name: kafka
    check_module: kafka_consumer
    pattern:
      comm: java
      arg: kafka.Kafka
    conf:
      kafka_consumer_offsets: true
      kafka_connect_str: "127.0.0.1:9093"
      zk_connect_str: "localhost:2181"
      zk_prefix: /
      consumer_groups:
        test-group:
          test: [0, ]
          test-4: [0, 1, 2, 3]
      security_protocol: SASL_SSL
      sasl_mechanism: PLAIN
      sasl_plain_username: <USERNAME>
      sasl_plain_password: <PASSWORD>
      ssl_check_hostname: true
      ssl_cafile: <SSL_CA_FILE_PATH>
      #ssl_context: <SSL_CONTEXT>
      #ssl_certfile: <CERT_FILE_PATH>
      #ssl_keyfile: <KEY_FILE_PATH>
      #ssl_password: <PASSWORD>
      #ssl_crlfile: <SSL_FILE_PATH>

Configuration Keywords and Descriptions

| Keyword | Description | Default Value |
|---|---|---|
| security_protocol | Protocol used to communicate with brokers. | |
| sasl_mechanism | String picking SASL mechanism when … | Currently only … |
| sasl_plain_username | Username for SASL | |
| sasl_plain_password | Password for SASL | |
| ssl_context | Pre-configured SSLContext for wrapping socket connections. If provided, all other … | none |
| ssl_check_hostname | Flag to configure whether SSL handshake should verify that the certificate matches the broker's hostname. | true |
| ssl_cafile | Optional filename of CA file to use in certificate verification. | none |
| ssl_certfile | Optional filename of … | none |
| ssl_keyfile | Optional filename containing the client private key. | none |
| ssl_password | Optional password to be used when loading the certificate chain. | none |
| ssl_crlfile | Optional filename containing the CRL to check for certificate expiration. By default, no CRL check is done. When providing a file, only the leaf certificate will be checked against this CRL. The CRL can only be checked with Python 2.7.9+. | none |

Example 6: Regex for Consumer Groups and Topics

As of Sysdig agent version 0.94, the Kafka app check has added optional regex (regular expression) support for Kafka consumer groups and topics.

Regex Configuration:

- No new metrics are added with this feature.
- The new parameter consumer_groups_regex is added, which includes regex for consumers and topics from Kafka. Consumer offsets stored in Zookeeper are not collected.
- Regex for topics is optional. When not provided, all topics under the consumer will be reported.
- The regex Python syntax is documented here: https://docs.python.org/3.7/library/re.html#regular-expression-syntax
- If both consumer_groups and consumer_groups_regex are provided at the same time, matched consumer groups from both parameters will be merged.

Sample configuration:

app_checks:
  - name: kafka
    check_module: kafka_consumer
    pattern:
      comm: java
      arg: kafka.Kafka
    conf:
      kafka_connect_str: "localhost:9092"
      zk_connect_str: "localhost:2181"
      zk_prefix: /
      kafka_consumer_offsets: true
      # Regex can be provided in following format
      # consumer_groups_regex:
      #   'REGEX_1_FOR_CONSUMER_GROUPS':
      #     - 'REGEX_1_FOR_TOPIC'
      #     - 'REGEX_2_FOR_TOPIC'
      consumer_groups_regex:
        'consumer*':
          - 'topic'
          - '^topic.*'
          - '.*topic$'
          - '^topic.*'
          - 'topic\d+'
          - '^topic_\w+'
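The way regex entries select consumer groups and topics, and are then merged with explicitly listed consumer groups, can be sketched in Python as follows. This is a simplified illustration under several assumptions: the function names and the `discovered` input (consumer groups and topics as reported by Kafka) are hypothetical, patterns are applied with `re.search`, and partitions are left out for brevity.

```python
import re


def resolve_regex_groups(consumer_groups_regex, discovered):
    """Select {group: {topics}} from `discovered` using consumer_groups_regex.

    discovered: {group_name: [topic_name, ...]} (hypothetical input).
    """
    resolved = {}
    for group_pattern, topic_patterns in consumer_groups_regex.items():
        topic_patterns = topic_patterns or []
        for group, topics in discovered.items():
            if not re.search(group_pattern, group):
                continue
            for topic in topics:
                # Topic regex is optional: without it, every topic of the consumer is kept.
                if not topic_patterns or any(re.search(p, topic) for p in topic_patterns):
                    resolved.setdefault(group, set()).add(topic)
    return resolved


def merge_groups(static_groups, regex_groups):
    """Union of explicitly listed groups and regex-matched groups."""
    merged = {group: set(topics) for group, topics in static_groups.items()}
    for group, topics in regex_groups.items():
        merged.setdefault(group, set()).update(topics)
    return merged


discovered = {"consumer-1": ["topic_1", "orders"], "billing": ["topic_2"]}
regex_groups = resolve_regex_groups({"consumer*": ["^topic.*"]}, discovered)
print(regex_groups)
# -> {'consumer-1': {'topic_1'}}
print(merge_groups({"sample-consumer-1": ["sample-topic-1"]}, regex_groups))
# -> {'sample-consumer-1': {'sample-topic-1'}, 'consumer-1': {'topic_1'}}
```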

Example

| Regex | Description | Examples Matched | Examples NOT Matched |
|---|---|---|---|
| topic_\d+ | All strings having keyword topic followed by _ and one or more digit characters (equal to [0-9]) | my-topic_1, topic_23, topic_5-dev | topic_x, my-topic-1, topic-123 |
| topic | All strings having topic keyword | topic_x, x_topic123 | xyz |
| consumer* | All strings containing consume followed by zero or more r characters, which includes every string containing consumer | consumer-1, sample-consumer, sample-consumer-2 | xyz |
| ^topic_\w+ | All strings starting with topic followed by _ and any one or more word characters (equal to [a-zA-Z0-9_]) | topic_12, topic_x, topic_xyz_123 | topic-12, x_topic |
| ^topic.* | All strings starting with topic | topic-x, topic123 | x-topic, x_topic123 |
| .*topic$ | All strings ending with topic | x_topic, sampletopic | topic-1, x_topic123 |
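A selection of the rows above can be checked with Python's re module. The sketch assumes the app check applies patterns with search semantics (a match anywhere in the name unless the pattern is anchored), which is what the matched examples in the table imply; the helper names are hypothetical.

```python
import re

# (pattern, names expected to match, names expected not to match)
CASES = [
    (r"topic_\d+", ["my-topic_1", "topic_23", "topic_5-dev"], ["topic_x", "my-topic-1", "topic-123"]),
    (r"topic", ["topic_x", "x_topic123"], ["xyz"]),
    (r"consumer*", ["consumer-1", "sample-consumer", "sample-consumer-2"], ["xyz"]),
    (r"^topic_\w+", ["topic_12", "topic_x", "topic_xyz_123"], ["topic-12", "x_topic"]),
    (r"^topic.*", ["topic-x", "topic123"], ["x-topic", "x_topic123"]),
    (r".*topic$", ["x_topic", "sampletopic"], ["topic-1", "x_topic123"]),
]


def is_match(pattern, name):
    # Assumption: search semantics, so unanchored patterns may match mid-string.
    return re.search(pattern, name) is not None


def check_cases(cases):
    """Return the (pattern, name) pairs whose result disagrees with the expectation."""
    failures = []
    for pattern, should_match, should_not_match in cases:
        failures += [(pattern, n) for n in should_match if not is_match(pattern, n)]
        failures += [(pattern, n) for n in should_not_match if is_match(pattern, n)]
    return failures


print(check_cases(CASES))  # -> []
```

Running a candidate pattern through a helper like this against real consumer group and topic names is a quick way to confirm it selects what you expect before adding it to dragent.yaml.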

Metrics Available

Kafka Consumer Metrics (App Checks)

See Apache Kafka Consumer Metrics.

JMX Metrics

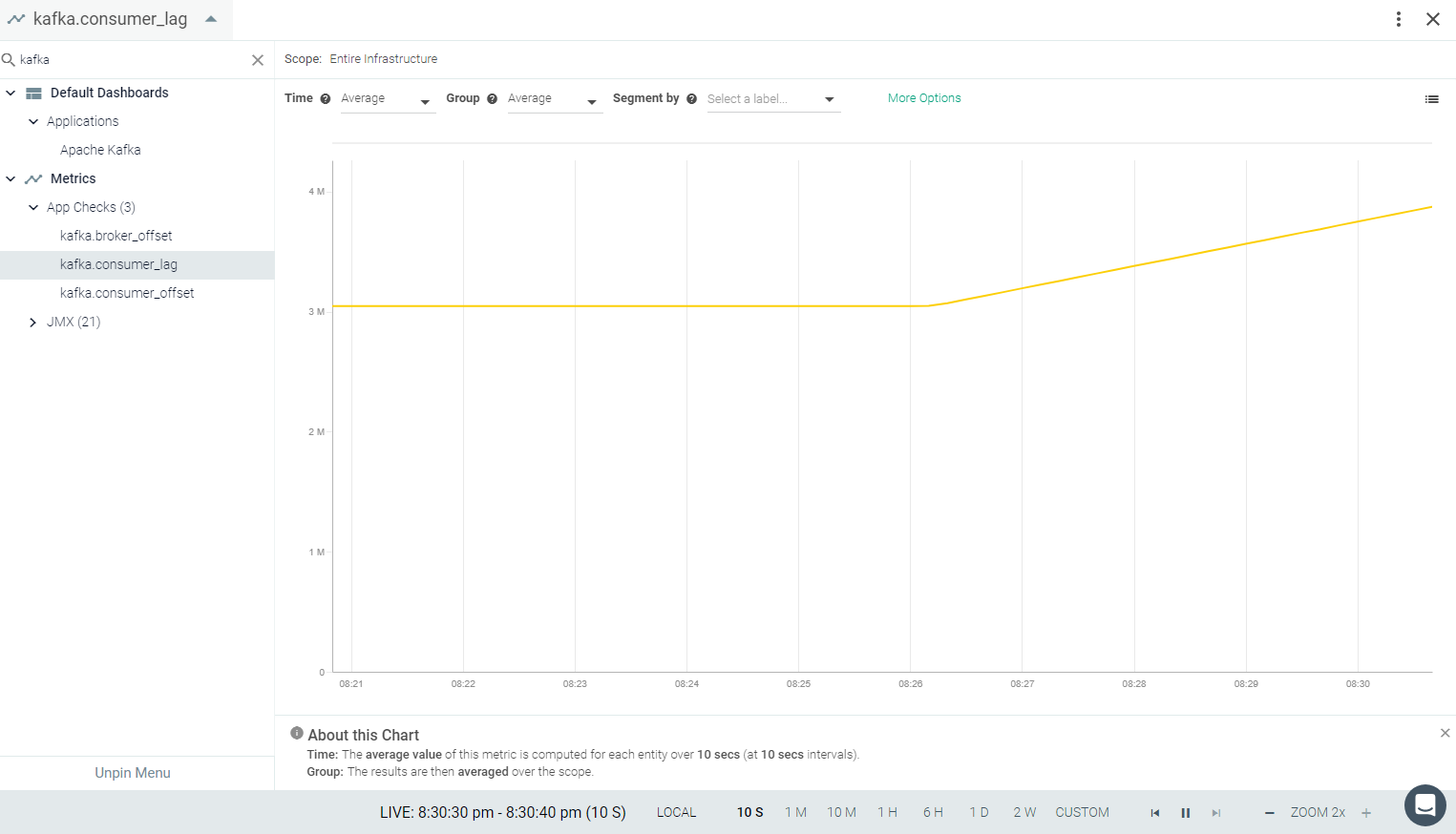

Result in the Monitor UI