Legacy PromQL Alerts

Sysdig Monitor lets you define alert conditions as PromQL metric expressions. This way, you can combine different metrics and alert on cases such as a service-level agreement breach or a disk that will run out of space within a day.

Examples

For PromQL alerts, you can use any metric that is available in PromQL, including Sysdig native metrics. For more details, see the integrations available on promcat.io.

Low Disk Space Alert

Warn if disk space falls below a specified quantity. For example, warn if free disk space is predicted to fall below 10 GB within the next 24 hours:

predict_linear(sysdig_fs_free_bytes{fstype!~"tmpfs"}[1h], 24*3600) < 10000000000
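To make the prediction step concrete, here is a minimal Python sketch of the idea behind `predict_linear`: fit a least-squares line through the samples in the range window and extrapolate it past the evaluation time. The function name, the sample data, and the `now` parameter are illustrative assumptions, not Sysdig or Prometheus code.

```python
def predict_linear(samples, horizon_s, now):
    """Fit a least-squares line to (timestamp, value) samples and
    return the value it predicts horizon_s seconds after `now`.
    Assumes at least two samples with distinct timestamps."""
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_v = sum(v for _, v in samples) / n
    cov = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    slope = cov / var
    intercept = mean_v - slope * mean_t
    return intercept + slope * (now + horizon_s)

THRESHOLD_BYTES = 10_000_000_000  # same 10 GB threshold as the expression above

# Hypothetical data: one sample every 10 minutes for 1 hour,
# with free space shrinking by 1 MB per second from 50 GB.
samples = [(t, 50e9 - 1e6 * t) for t in range(0, 3601, 600)]

predicted = predict_linear(samples, 24 * 3600, now=3600)
alert_fires = predicted < THRESHOLD_BYTES
```

With this made-up data the fitted line goes far below the threshold within 24 hours, so the condition holds even though the current free space (about 46 GB) is still well above 10 GB.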

Slow Etcd Requests

Notify if etcd requests are slow. This example uses the promcat.io integration.

histogram_quantile(0.99, rate(etcd_http_successful_duration_seconds_bucket[5m])) > 0.15
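As a rough illustration of how a quantile is estimated from histogram buckets, the following Python sketch finds the bucket that contains the requested rank and interpolates linearly inside it. The bucket boundaries and counts are invented for the example, and the helper is a simplified assumption rather than the actual PromQL implementation.

```python
def histogram_quantile(q, buckets):
    """Estimate the q-quantile from cumulative buckets.

    buckets: list of (upper_bound, cumulative_count) sorted by upper_bound,
    ending with (inf, total). Assumes 0 < q <= 1 and total > 0.
    """
    total = buckets[-1][1]
    rank = q * total
    # Find the first bucket whose cumulative count reaches the rank.
    for i, (le, count) in enumerate(buckets):
        if count >= rank:
            break
    if le == float("inf"):
        # The quantile falls in the open-ended bucket: report the
        # highest finite upper bound instead.
        return buckets[-2][0]
    lower = buckets[i - 1][0] if i > 0 else 0.0
    prev_count = buckets[i - 1][1] if i > 0 else 0.0
    # Linear interpolation inside the selected bucket.
    return lower + (le - lower) * (rank - prev_count) / (count - prev_count)

# Hypothetical request-duration buckets, in seconds.
buckets = [(0.05, 900), (0.1, 980), (0.25, 995), (0.5, 1000), (float("inf"), 1000)]

p99 = histogram_quantile(0.99, buckets)
alert_fires = p99 > 0.15  # same threshold as the expression above
```

Here the 99th percentile lands in the 0.1 s to 0.25 s bucket, and interpolation gives roughly 0.2 s, which is above the 0.15 s threshold.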

High Heap Usage

Warn when the heap usage in Elasticsearch is more than 80%. This example uses the promcat.io integration.

(elasticsearch_jvm_memory_used_bytes{area="heap"} / elasticsearch_jvm_memory_max_bytes{area="heap"}) * 100 > 80

Guidelines

Sysdig Monitor does not currently support the following:

Interacting with the Prometheus Alertmanager or importing Alertmanager configuration.

Using, copying, pasting, or importing predefined alert rules.

Converting alert rules to map to the Sysdig alert editor.

Create a PromQL Alert

Set a meaningful name and description that help recipients easily identify the alert.

Set a Priority

Select a priority for the alert. The supported priorities are High, Medium, Low, and Info. You can also view events in the Dashboard and Explore UI and sort them by severity.

Define a PromQL Alert

PromQL: Enter a valid PromQL expression. The query will be executed every minute. However, the alert will be triggered only if the query returns data for the specified duration.

In this example, you will be alerted when the rate of HTTP requests has doubled over the last 5 minutes.

Duration: Specify the time window for evaluating the alert condition in minutes, hours, or days. The alert will be triggered if the query returns data for the specified duration.
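The interaction between the per-minute evaluation and the duration can be sketched as follows. This is a simplified Python model that assumes the alert fires once the query has returned data on every evaluation for the full duration. The function name and the exact boundary behavior are assumptions, not a description of the Sysdig implementation.

```python
def first_trigger_minute(evaluations, duration_minutes):
    """Return the 1-based minute at which the alert would first fire,
    or None if the condition never holds long enough.

    evaluations: one boolean per minute, True when the PromQL query
    returned data on that evaluation.
    """
    consecutive = 0
    for minute, has_data in enumerate(evaluations, start=1):
        # Any evaluation without data resets the streak.
        consecutive = consecutive + 1 if has_data else 0
        if consecutive >= duration_minutes:
            return minute
    return None

# Hypothetical run: the condition flaps once before holding steadily.
evaluations = [True, True, False, True, True, True, True, True]
fired_at = first_trigger_minute(evaluations, duration_minutes=5)
```

In this run the gap at minute 3 resets the count, so a 5-minute duration is first satisfied at minute 8.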

Define Notification

Notification Channels: Select from the configured notification channels in the list.

Re-notification Options: Set the time interval at which multiple alerts should be sent if the problem remains unresolved.

Notification Message & Events: Enter a subject and body. Optionally, you can choose an existing template for the body. Modify the subject, body, or both for the alert notification with a hyperlink, plain text, or dynamic variables.

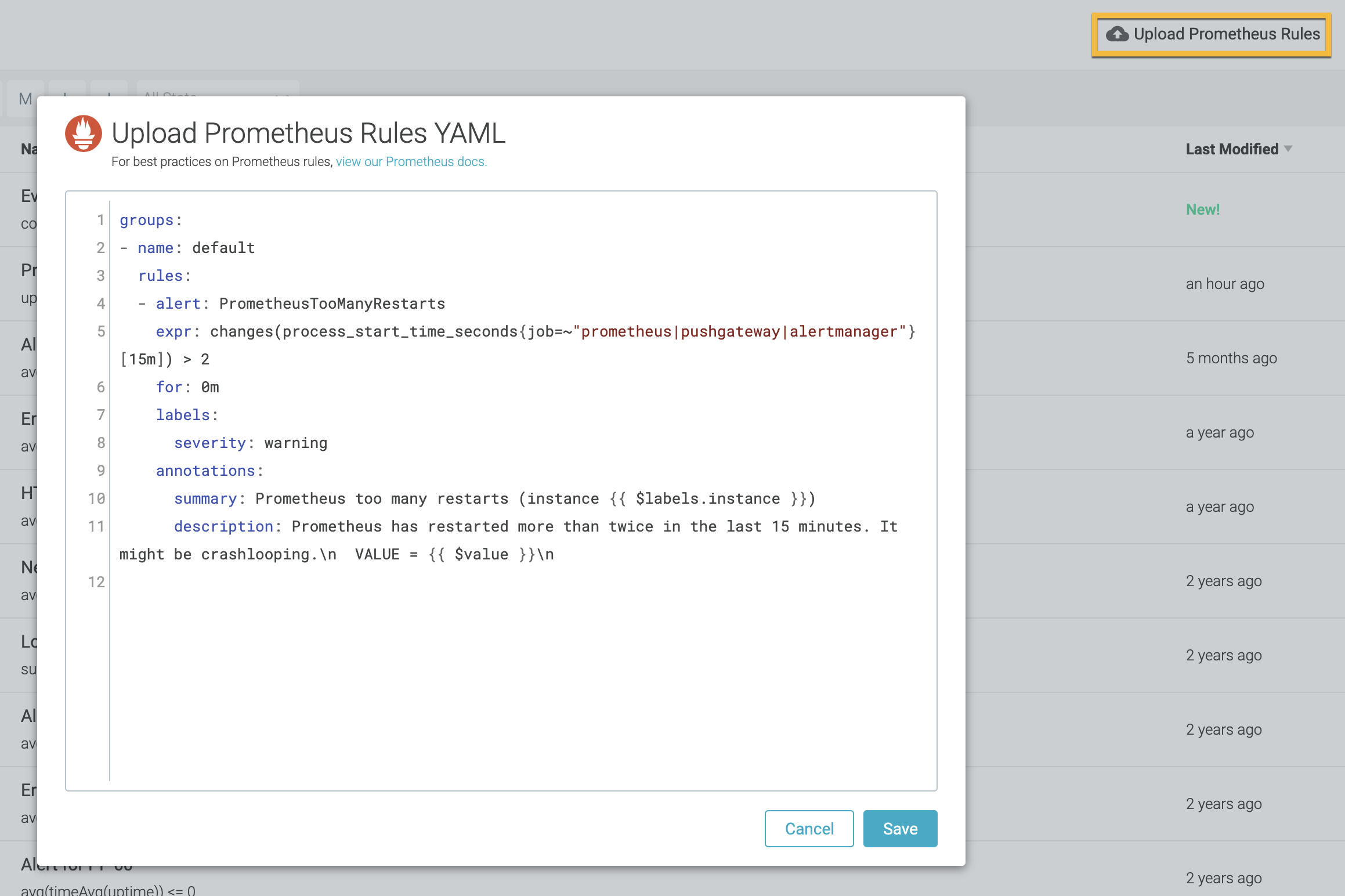

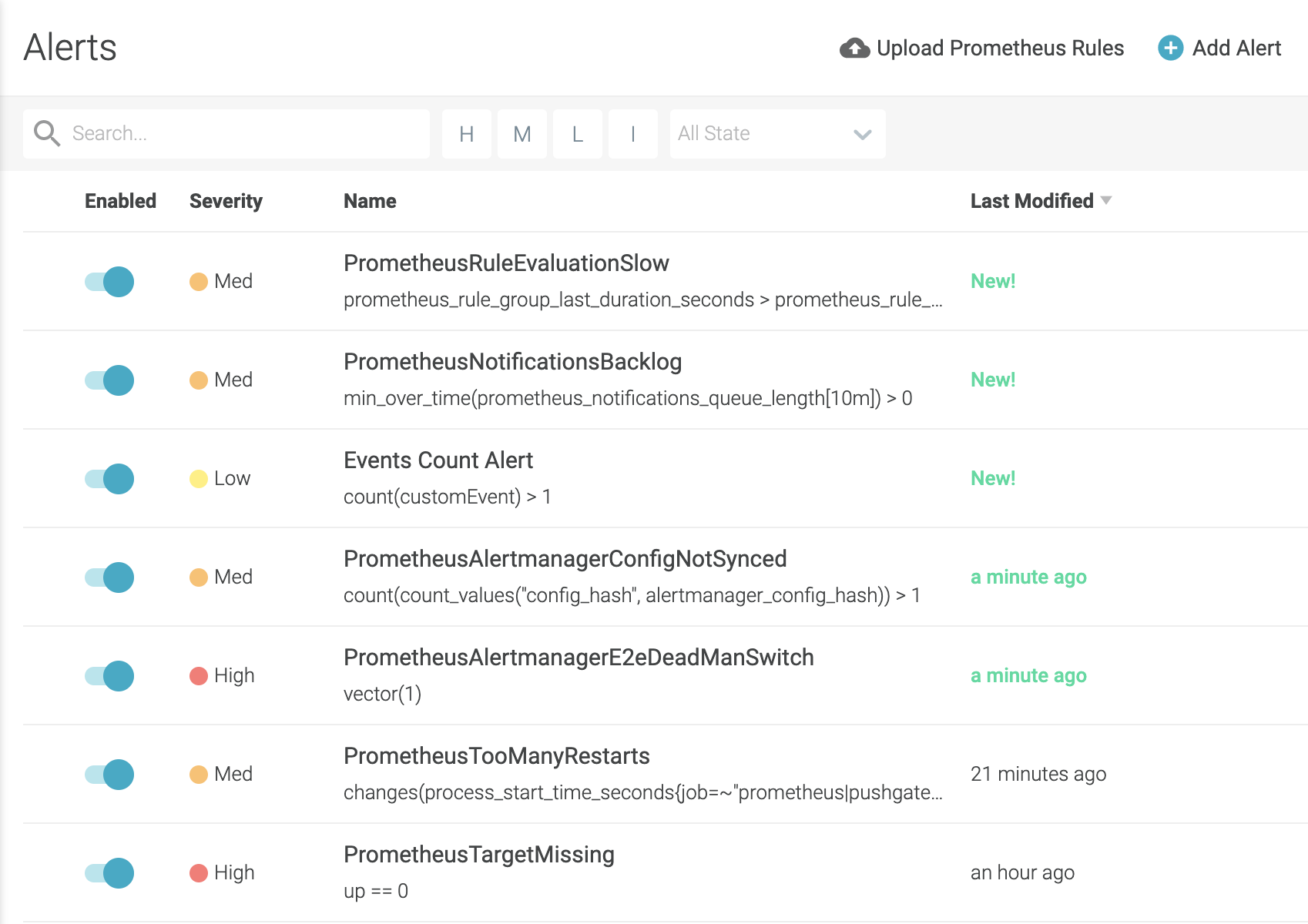

Import Prometheus Alert Rules

Sysdig Alert allows you to import Prometheus rules or create new rules on the fly and add them to the existing list of alerts. Click the Upload Prometheus Rules option and enter the rules as YAML in the Upload Prometheus Rules YAML editor. Importing your Prometheus alert rules will convert them to PromQL-based Sysdig alerts. Ensure that the alert rules are valid YAML.

You can upload one or more alert rules in a single YAML and create multiple alerts simultaneously.

Once the rules are imported to Sysdig Monitor, the alert list will be automatically sorted by last modified date.

Besides the pre-populated template, each rule specified in the Upload Prometheus Rules YAML editor requires the following fields:

alert

expr

for
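Before uploading, a quick check for these required fields can catch incomplete rules. The Python sketch below assumes the YAML has already been parsed into dictionaries and lists by a YAML parser of your choice. The helper name and the sample document are hypothetical, and the check does not replicate the validation that Sysdig performs.

```python
REQUIRED_FIELDS = ("alert", "expr", "for")

def missing_fields(rules_doc):
    """Map each incomplete rule to the required fields it lacks.

    rules_doc: the parsed rules YAML, shaped as
    {"groups": [{"name": ..., "rules": [{...}, ...]}, ...]}.
    """
    problems = {}
    for group in rules_doc.get("groups", []):
        for index, rule in enumerate(group.get("rules", [])):
            missing = [f for f in REQUIRED_FIELDS if f not in rule]
            if missing:
                # Fall back to "<group>[<index>]" when the rule has no name.
                name = rule.get("alert", f"{group.get('name', '?')}[{index}]")
                problems[name] = missing
    return problems

# Hypothetical parsed document: the second rule has no "for" field.
rules_doc = {
    "groups": [{
        "name": "default",
        "rules": [
            {"alert": "NginxHighHttp5xxErrorRate", "expr": "up == 0", "for": "1m"},
            {"alert": "NginxLatencyHigh", "expr": "up == 0"},
        ],
    }]
}

problems = missing_fields(rules_doc)
```

An empty result means every rule carries all three fields.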

The following examples show the format of the Prometheus rules YAML. Rules that are not valid YAML will not pass validation.

Example: Alert Prometheus Crash Looping

To alert on potential Prometheus crash looping, create a rule that fires when Prometheus restarts more than twice in the last 10 minutes.

groups:
- name: crashlooping
  rules:
  - alert: PrometheusTooManyRestarts
    expr: changes(process_start_time_seconds{job=~"prometheus|pushgateway|alertmanager"}[10m]) > 2
    for: 0m
    labels:
      severity: warning
    annotations:
      summary: Prometheus too many restarts (instance {{ $labels.instance }})
      description: "Prometheus has restarted more than twice in the last 10 minutes. It might be crashlooping.\n VALUE = {{ $value }}\n"
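The rule above relies on `changes()`, which counts how many times a series' value changed inside the range window. Because `process_start_time_seconds` only changes when a process restarts, each change corresponds to one restart. A minimal Python sketch of that counting, using made-up sample values:

```python
def changes(samples):
    """Count how many times the value differs from the previous sample."""
    return sum(1 for prev, cur in zip(samples, samples[1:]) if cur != prev)

# Hypothetical process_start_time_seconds samples over a 10-minute window.
# The value jumps each time the process restarts.
start_times = [100, 100, 160, 160, 220, 280, 280]

restarts = changes(start_times)
alert_fires = restarts > 2  # same threshold as the rule above
```

Three distinct jumps in the window mean three restarts, so the rule's condition is met.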

Example: Alert HTTP Error Rate

To alert on a high rate of HTTP 5xx responses (more than 5%) or on high latency:

groups:
- name: default
  rules:
  # Paste your rules here
  - alert: NginxHighHttp5xxErrorRate
    expr: sum(rate(nginx_http_requests_total{status=~"^5.."}[1m])) / sum(rate(nginx_http_requests_total[1m])) * 100 > 5
    for: 1m
    labels:
      severity: critical
    annotations:
      summary: Nginx high HTTP 5xx error rate (instance {{ $labels.instance }})
      description: Too many HTTP requests with status 5xx
  - alert: NginxLatencyHigh
    expr: histogram_quantile(0.99, sum(rate(nginx_http_request_duration_seconds_bucket[2m])) by (host, node, le)) > 3
    for: 2m
    labels:
      severity: warning
    annotations:
      summary: Nginx latency high (instance {{ $labels.instance }})
      description: Nginx p99 latency is higher than 3 seconds
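The arithmetic behind the 5xx error-rate expression can be checked by hand. The Python sketch below takes per-status request rates, sums the ones whose status matches `5..`, and expresses them as a percentage of all requests. The function name and the rates are invented for illustration.

```python
import re

def error_rate_percent(rates_by_status):
    """Return the share of 5xx requests as a percentage of all requests.

    rates_by_status: requests per second keyed by HTTP status string.
    Returns None when there is no traffic; in PromQL the division would
    yield NaN, which never satisfies the comparison.
    """
    total = sum(rates_by_status.values())
    if total == 0:
        return None
    # PromQL regex matchers are fully anchored, hence fullmatch.
    errors = sum(rate for status, rate in rates_by_status.items()
                 if re.fullmatch(r"5..", status))
    return errors / total * 100

# Hypothetical per-status request rates (requests per second).
rates = {"200": 90.0, "404": 4.0, "500": 4.5, "503": 1.5}

percent = error_rate_percent(rates)
alert_fires = percent is not None and percent > 5
```

With 6 of every 100 requests returning a 5xx status, the rate is about 6%, which exceeds the 5% threshold.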
